|

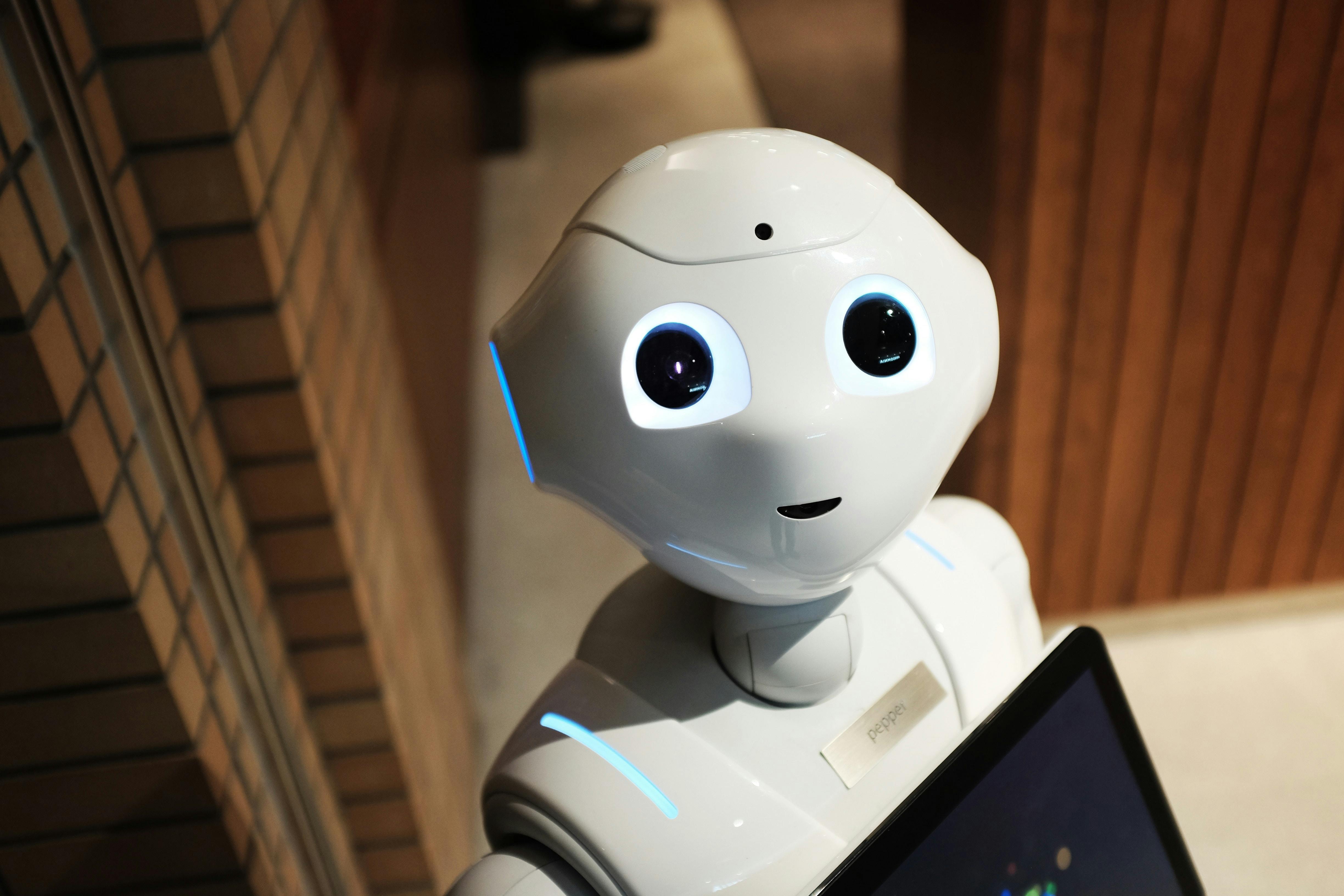

| https://www.pexels.com/photo/high-angle-photo-of-robot-2599244/ |

After working 9 years in the security industry, 9 years in data management and platform, and over 2 years in the LLM space, along with conducting numerous design thinking workshops, creating over a hundred slides, and interviewing dozens of customers and stakeholders, it has become clear that focusing solely on LLM performance and capabilities may not address the full picture. The real opportunity lies in ensuring quality data, security, and ethical use of these powerful tools.

Much time is spent benchmarking LLMs, comparing their performance on various tasks, and evaluating their capabilities. Significant resources are invested in prompt engineering, fine-tuning models, and optimizing their outputs. While these efforts are valuable, they can sometimes overshadow the equally important need for user experience and quality data.

It's equally important to invest considerable effort in MLOps, data engineering, and ensuring that the data feeding into these models is accurate, relevant, and up-to-date. This includes addressing data biases, ensuring compliance with regulations, and maintaining data security and privacy. These aspects are crucial for building trustworthy AI systems that users can rely on.

The quality assurance space is also evolving rapidly, with new tools and techniques emerging to validate and verify AI outputs.

- How can we ensure that the solution is not subjected to jailbreaks or prompt injections?

- How can we ensure that users don't inadvertently or deliberately use the system to generate harmful or biased content?

- How can we monitor and audit AI outputs to ensure they meet quality and ethical standards?

- How can we provide transparency and explainability in AI decision-making processes?

- How can we educate our developers about responsible AI use and best practices?

By addressing these questions and focusing on the broader aspects of AI deployment, we can create more robust, reliable, and user-friendly AI systems. It's not just about how well the LLM performs; it's about how well it serves the needs of its users while adhering to ethical and quality standards.

There's an interesting overlap between security and responsible AI that's worth exploring. Both fields share common goals of protecting users, ensuring trust, and maintaining integrity. By leveraging security principles and practices, we can enhance the responsible use of AI technologies.

Comments

Post a Comment